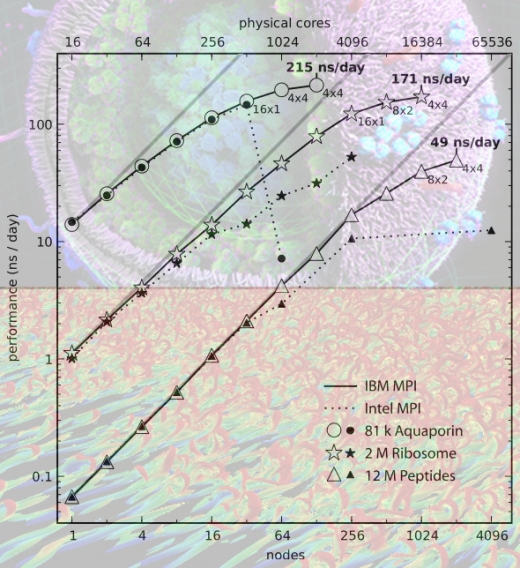

The SeRC Steering Group has established a new flagship program on software for exascale simulations. The program aims to improve the performance and scalability of selected software packages by setting up new collaborative projects for the exchange of expertise among research groups in the Molecular Simulations, FLOW and DPT SeRC communities. More information about the flagship can be found here.